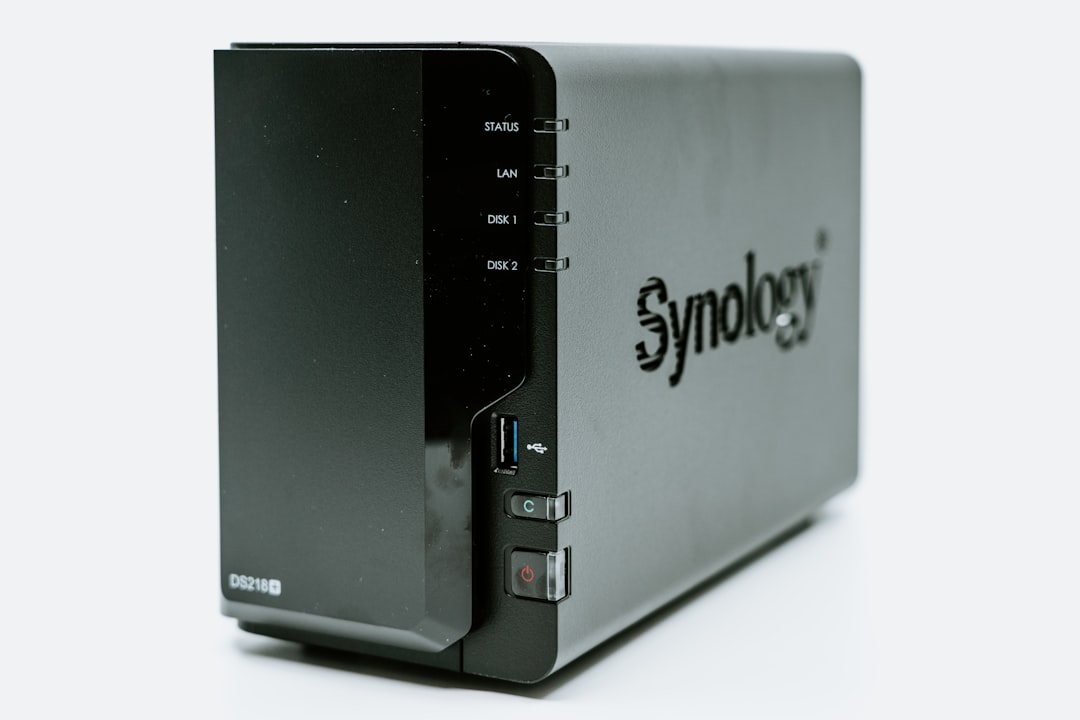

In a typical IT environment, scheduled snapshots are a fundamental part of any data protection strategy. They allow administrators to create point-in-time copies of data, enabling quick recovery from accidental deletions, data corruption, or ransomware attacks. However, snapshot management is not without its pitfalls—particularly when retention policies don’t evolve with data usage or storage capacity constraints. This article walks through a real-world case where improperly managed scheduled snapshots led to a NAS storage system becoming read-only, effectively halting productivity. We’ll explore how a simple yet effective rewrite of the retention policy reversed the situation, reclaimed capacity, and restored normal operations.

TL;DR

Scheduled snapshots on a NAS system spiraled out of control, eventually consuming all available storage and triggering the read-only safeguard, pausing operations. The root cause was an outdated retention policy that failed to account for data growth. By analyzing snapshot behavior and rewriting the retention rules based on actual usage patterns, the team reclaimed critical capacity and returned the system to stable operation. The lesson: snapshot management must be agile and adaptive, not static.

The Rise of Snapshot Chaos

Snapshots are designed to be seamless and invisible, quietly preserving point-in-time copies of data in the background. It’s easy to forget they exist until they create a problem. In this case, a network-attached storage (NAS) system had been configured several months earlier to take hourly snapshots and to keep daily snapshots for 7 days, weekly snapshots for 4 weeks, and monthly snapshots for 12 months. At first, this schedule seemed reasonable. It offered granular short-term recovery along with long-term historical retention.

But over the following months, data growth accelerated. Project volumes increased, development teams added more large datasets, and user-generated content multiplied. The snapshot schedule didn’t change. And more critically, no one was monitoring how the cumulative space used by snapshots was trending over time.

Because the NAS used copy-on-write or redirect-on-write snapshot technology, every block modified after a snapshot had to coexist with its preserved original, so the number of stored blocks per file grew for as long as the snapshots referencing the old versions were retained. As the snapshots piled up, so did the space they occupied, hidden from immediate view yet weighing down the system’s available capacity like an iceberg under the surface.
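To make the accumulation concrete, here is a minimal toy model in Python. It is a sketch under simplifying assumptions, not a description of any particular vendor's implementation: the volume is a flat list of blocks, one snapshot is taken per day, and each day's writes land on blocks that have not been touched since the previous snapshot. The function name `pinned_blocks` and all parameters are hypothetical.

```python
from collections import deque


def pinned_blocks(num_blocks, writes_per_day, days, keep_last):
    """Count old block versions kept alive only by retained snapshots.

    Toy model (assumption): a block is identified by (index, version), and a
    snapshot is a frozen copy of the block map at the moment it is taken.
    """
    live = [0] * num_blocks               # current version of every block
    snapshots = deque(maxlen=keep_last)   # retention: keep the newest N only
    cursor = 0
    for _ in range(days):
        snapshots.append(tuple(live))     # take the daily snapshot
        for _ in range(writes_per_day):   # then overwrite some blocks
            live[cursor % num_blocks] += 1
            cursor += 1
    referenced = {(i, v) for snap in snapshots for i, v in enumerate(snap)}
    current = {(i, v) for i, v in enumerate(live)}
    # Versions still referenced by a snapshot but no longer part of live data.
    return len(referenced - current)


short_retention = pinned_blocks(100, 10, 30, keep_last=7)
long_retention = pinned_blocks(100, 10, 30, keep_last=28)
print(short_retention, long_retention)
```

In this model, quadrupling the retention window quadruples the number of old block versions that cannot be freed, even though the live data set never grows. Real systems differ in block size, metadata overhead, and how writes overlap, so treat the result as an illustration of the trend rather than a sizing formula.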

The system didn’t raise alarms until it was too late. Usage thresholds weren’t configured aggressively enough, and by the time the storage space reached 100% utilization, the file system automatically switched to read-only mode to prevent data corruption. That flip of a switch was the wake-up call.

The Critical Incident: When Everything Stopped

When the NAS storage became read-only, it had severe knock-on effects:

- Development teams couldn’t check in code or access test environments tied to the NAS

- Virtual machines hosted on the same storage began to fail

- File sharing for critical departments halted, affecting real-time collaboration

- Monitoring and backup processes began to fail as well

The IT team scrambled to determine what was eating all the space. They started with the file system itself, looking for large log files or rogue directories. Nothing stood out. It wasn’t until a deeper dive using snapshot-aware tools that the true culprit was revealed: out-of-control snapshot accumulation.

What Went Wrong With the Retention Policy?

The original retention plan was built for a smaller, less active environment. The team had used default values offered by the NAS vendor, assuming the system would handle cleanup responsibly. But two things had happened:

- Data change rate had significantly increased, especially during business peaks.

- The number and frequency of snapshots led to an excessive accumulation of changed blocks.

The snapshot policy looked good on paper—but it lacked key elements:

- No maximum space constraints defined for snapshot storage

- No expiration dates calculated based on space consumption

- No dynamic adjustments based on change rates or usage patterns

Snapshots were living longer than they were useful. Worse, they were protected by a higher-priority process that ensured they wouldn’t be deleted—even in low-space scenarios.

The Turning Point: Rewriting the Retention Policy

After identifying the root cause, the team quickly crafted a triage plan. Step one was to delete older snapshots manually, starting with monthly ones that were barely touched and rarely needed. This helped free up enough space to return the volume to a writable state, buying critical time.

Next came the real fix: a retention policy overhaul. The team reviewed a week’s worth of activity logs and block change rates, then created a new policy with the following characteristics:

- Hourly snapshots: retained for only 24 hours

- Daily snapshots: retained for a sliding window of 5 days

- Weekly snapshots: kept for only 3 weeks

- Monthly snapshots: limited to 6 months

- Space budget cap: no more than 20% of total volume space could be used by snapshots
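The time-based part of a policy like this can be written down as data and checked mechanically. The following Python sketch shows one way to do that. The `Snapshot` class, the `RETENTION` table, and the `expired` function are hypothetical names for illustration, not part of any NAS vendor's API, and a month is approximated as 30 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Snapshot:
    name: str
    tier: str            # "hourly", "daily", "weekly", or "monthly"
    created: datetime
    size_bytes: int


# Retention windows from the rewritten policy.
# Assumption: "6 months" is approximated as 6 * 30 days.
RETENTION = {
    "hourly": timedelta(hours=24),
    "daily": timedelta(days=5),
    "weekly": timedelta(weeks=3),
    "monthly": timedelta(days=6 * 30),
}

# Space budget cap: snapshots may use at most 20% of the volume.
SNAPSHOT_BUDGET_FRACTION = 0.20


def expired(snapshots, now):
    """Return the snapshots that are older than their tier's retention window."""
    return [s for s in snapshots if now - s.created > RETENTION[s.tier]]
```

Keeping the windows in a single table makes a quarterly review a one-line change, and it gives a script something concrete to compare against what actually exists on the volume.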

The team also implemented automated purging scripts with logging to avoid blind accumulation. If snapshots began to consume more than the predefined threshold, the oldest were purged first, even if they were still within their retention window.
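The article does not show the team's scripts, so the following is only a sketch of the oldest-first selection logic in Python. The names `Snap` and `select_for_purge` are hypothetical, and the function only decides what to purge. Actual deletion would go through whatever interface the storage system provides. It also assumes that each snapshot's reported size is the space its deletion would free, which is an approximation on copy-on-write systems where blocks can be shared between snapshots.

```python
import logging
from datetime import datetime
from typing import NamedTuple

log = logging.getLogger("snapshot_purge")


class Snap(NamedTuple):
    name: str
    created: datetime
    size_bytes: int


def select_for_purge(snapshots, volume_bytes, budget_fraction=0.20):
    """Pick the oldest snapshots until total snapshot usage fits the budget."""
    budget = volume_bytes * budget_fraction
    used = sum(s.size_bytes for s in snapshots)
    to_purge = []
    for snap in sorted(snapshots, key=lambda s: s.created):  # oldest first
        if used <= budget:
            break
        to_purge.append(snap)
        used -= snap.size_bytes
        # Log every decision so purges are never silent.
        log.info("purge %s (%d bytes); projected usage %d of budget %d",
                 snap.name, snap.size_bytes, used, int(budget))
    return to_purge
```

Separating selection from deletion makes it possible to run the script in a dry-run mode first and review the log before anything is removed.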

Lessons Learned and Best Practices

The incident was a harsh but valuable learning experience. Here are some lessons the team highlighted for posterity:

- Snapshot sprawl is real. Monitor not just how many snapshots exist, but how much space they occupy cumulatively.

- Retention policies should be reviewed quarterly, especially after data growth surges or changes in data usage patterns.

- Always define space-bound rules in addition to time-based ones. Don’t rely solely on aging logic.

- Alerts matter. Set up space usage alerts that consider snapshot growth, not just used capacity from user files.

- Think agility. Any policy tied to unpredictable growth must be flexible. Use scripts or storage management tools that adapt automatically.
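As an illustration of an alert that accounts for snapshot growth as well as live data, the Python sketch below projects how many days remain until the volume fills, using a simple linear trend. The function names and the 30-day and 7-day thresholds are assumptions chosen for the example, not values from the incident.

```python
def days_until_full(capacity_gb, live_gb, snapshot_gb,
                    live_growth_gb_per_day, snapshot_growth_gb_per_day):
    """Linear projection of days until the volume is full.

    Returns 0.0 if the volume is already full and None if usage is not growing.
    """
    free = capacity_gb - live_gb - snapshot_gb
    if free <= 0:
        return 0.0
    rate = live_growth_gb_per_day + snapshot_growth_gb_per_day
    if rate <= 0:
        return None
    return free / rate


def alert_level(days_left, warn_days=30.0, critical_days=7.0):
    """Map a projection to an alert level (thresholds are illustrative)."""
    if days_left is None:
        return "ok"
    if days_left < critical_days:
        return "critical"
    if days_left < warn_days:
        return "warning"
    return "ok"


# Example: 1000 GB volume, 600 GB live data, 200 GB held by snapshots.
remaining = days_until_full(1000, 600, 200, 5, 15)
print(remaining, alert_level(remaining))
```

In this example, an alert based on live data growth alone would project 40 days of headroom. Including the snapshot growth rate cuts that to 10 days, which is the kind of gap that went unnoticed in the incident.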

Final Thoughts

It’s easy to forget about snapshots—until they become the digital equivalent of hoarding. What started as a silent, helpful mechanism can suddenly paralyze your infrastructure if not actively managed. As this case shows, the solution isn’t necessarily to avoid snapshots but to evolve their governance.

With a smarter retention strategy and better monitoring in place, the NAS environment returned to stability. More importantly, IT gained an appreciation not just for data protection, but for ensuring that protection doesn’t end up becoming a liability.

If you’re managing storage systems today, ask yourself: when did we last audit our snapshot policies? If the answer isn’t recently, it’s time for a closer look—and possibly a much-needed rewrite.